Did you catch the recent NIST Cyber AI Workshop #2?

NIST and the NCCoE hosted a full-day hybrid workshop on January 14th to discuss the preliminary draft of the Cybersecurity AI Profile and provide updates on SP 800-53 Control Overlays for Securing AI Systems (COSAiS).

If you missed it, I've got you covered. Here are my notes on what you need to know.

TL;DR

NIST's new Cybersecurity Framework AI Profile addresses three critical areas: Secure (protecting AI systems), Defend (using AI for cyber defense), and Thwart (countering AI-enabled attacks)

No guardrails are universally robust against adversarial AI attacks -this is a “mathematical certainty”, not theoretical

Agentic AI exponentially increases attack surface compared to static chatbots

The guidance extends existing frameworks (CSF 2.0, SP 800-53) -you don't need to reinvent the wheel

Dioptra testing platform available on GitHub for reproducible AI security evaluations

Join the NIST Overlays for Securing AI Systems Slack channel to collaborate directly with NIST investigators on control overlay development

HIGHLIGHTS

NIST Cybersecurity AI Profile: What Every Security Professional Needs to Know

CISOs are drowning in AI guidance while leadership demands to know "what are we doing about AI?" Sound familiar?

NIST just hosted their second public workshop on the Cybersecurity AI Profile -a collaborative framework designed to cut through the noise and give security teams actionable guidance. With 7,500+ practitioners in the community of interest and 2,500+ document downloads, this isn't just another framework gathering dust on a shelf.

Here's what matters for your security program.

The Three Focus Areas That Define AI Cybersecurity

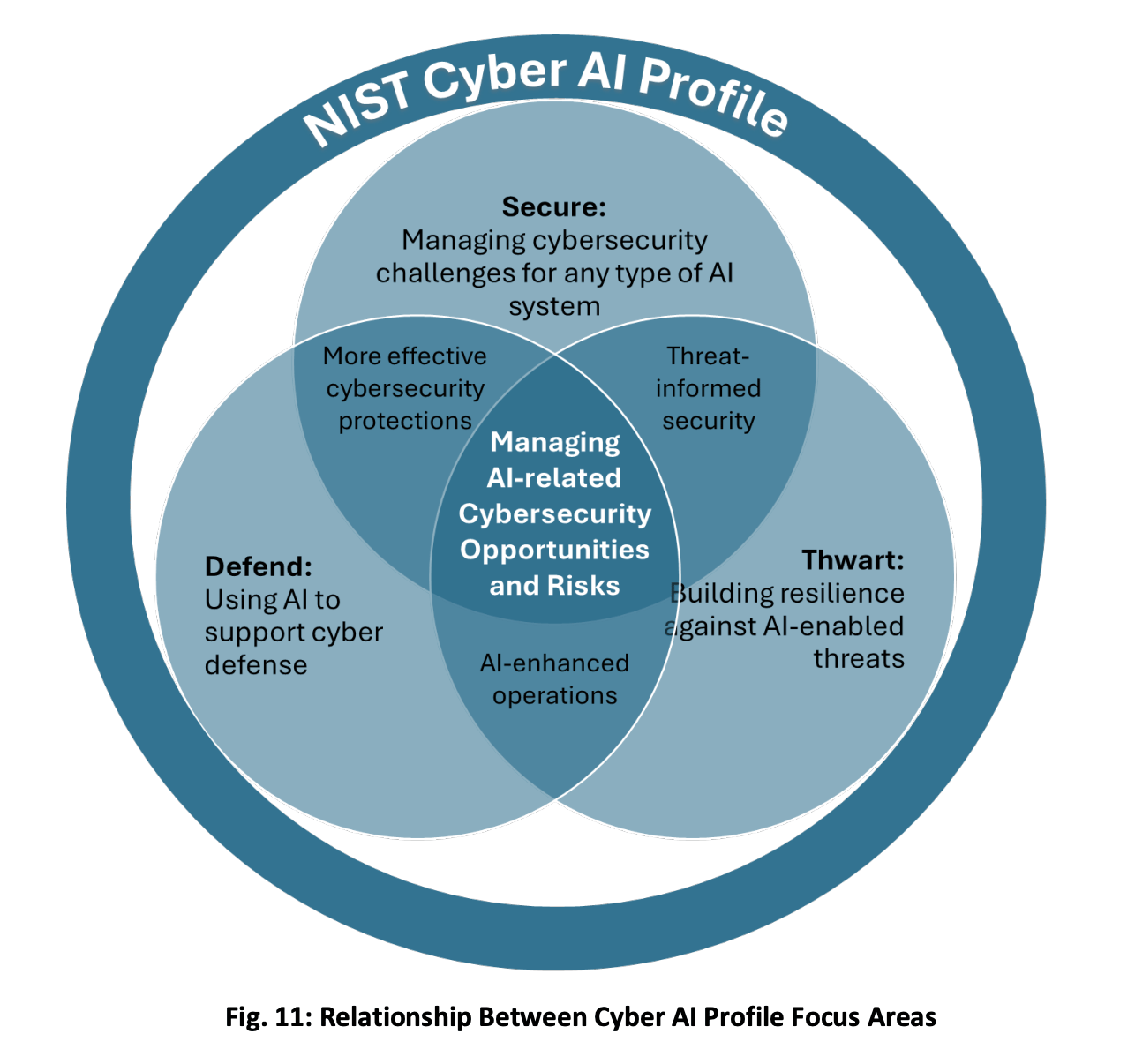

NIST structured the profile around three focus areas that cover the full spectrum of AI-related security challenges:

1. SECURE – Protecting AI systems themselves, their supply chains, data pipelines, and ML infrastructure from attack.

2. DEFEND – Using AI to enhance your cybersecurity operations -threat detection, analysis, response, and remediation.

3. THWART – Countering adversary use of AI-enabled attacks against your organization.

"Even if you're not using AI, AI is affecting your life."

That last point is critical. Whether you've adopted AI or not, you're already facing an AI-changed threat landscape.

TAKEAWAY

The Cybersecurity AI Profile builds on CSF 2.0's 6 functions, 22 categories, and 106 subcategories -adapted for AI contexts. Don’t reinvent the wheel. Map your AI considerations to what already works.

📚 Free resource: NIST Cybersecurity AI Profile Preliminary Draft

Some Prompts Will Always Bypass Security Measures

Here's what NIST's adversarial ML research team confirmed -and it's not what vendors want you to hear:

There are NO guardrails that are universally robust against adversarial attacks.

This isn't speculation. It's a mathematical statement. Some prompts will always bypass security measures.

You cannot escape gravity, as we say.

A new LLM is being released, and within days, security researchers report successful attacks against it.

What AI-Enabled Attacks Look Like Today

Spear phishing on steroids – AI dramatically improves attackers' ability to gather information and craft highly tailored, convincing emails

Voice and video spoofing – If you're still using voice or video for identity verification, stop. It's no longer reliable

Speed advantage – AI-enabled attacks fundamentally change operational tempo

👉 Pro Tip: Accept that static defenses will fail. Implement continuous guardrail updates and regular in-house red teaming. This is an arms race, not a one-time implementation.

📚 Free resource: NIST AI 100-2 E2025: Adversarial Machine Learning Taxonomy

TAKEAWAY

Train your entire enterprise on AI-enabled attack methods -not just security teams. The threat landscape has fundamentally shifted.

SP 800-53 Control Overlays for Securing AI Systems (COSAiS)

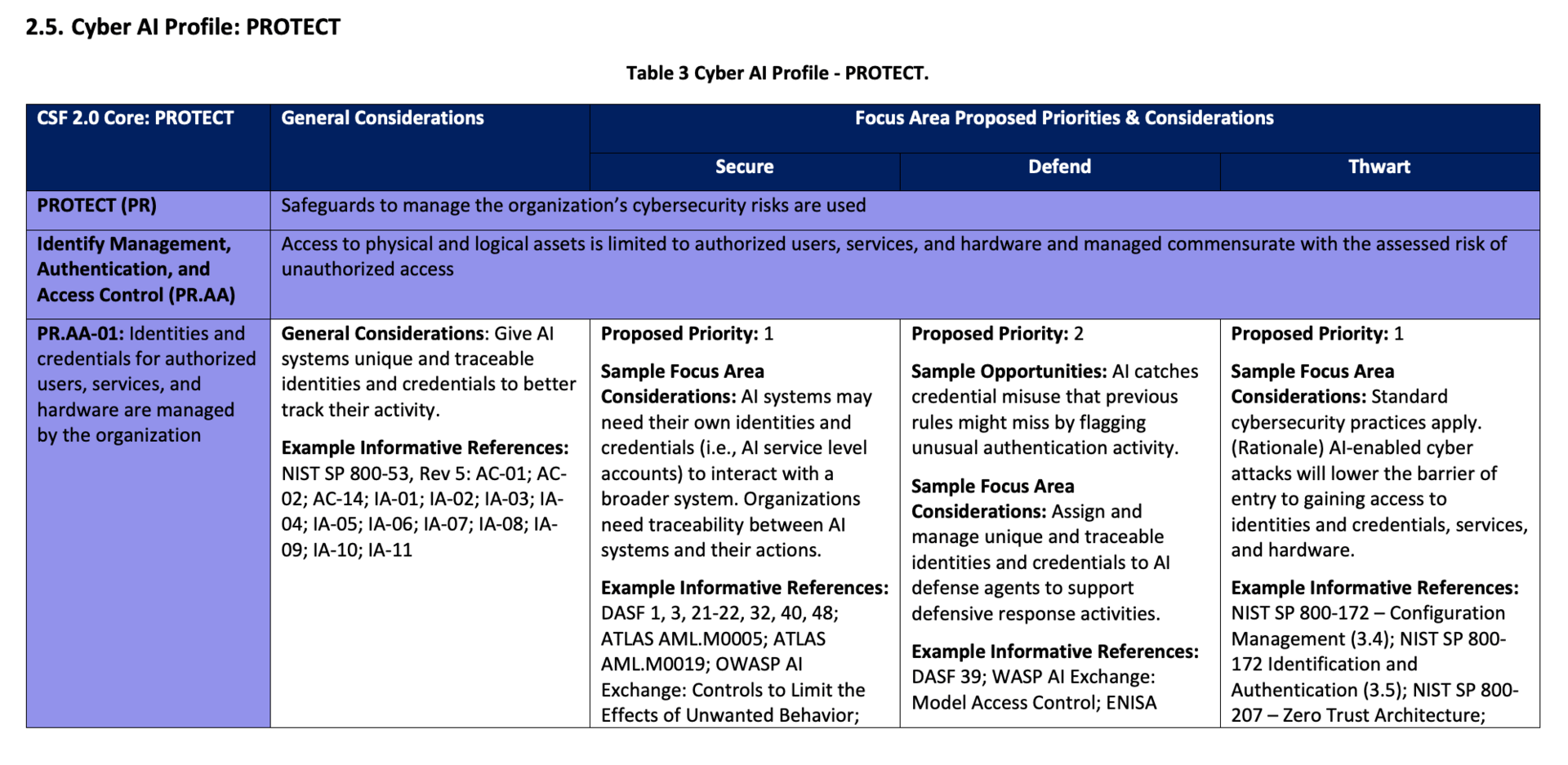

NIST is developing control overlays that build on existing SP 800-53 controls rather than creating entirely new frameworks.

"AI systems in many ways are just smart software, fancy software with a little bit extra."

What This Means for You

The overlays provide pick-and-choose building blocks for:

Predictive AI implementations

Generative AI deployments

Developer security controls

Agentic AI systems

The Multi-Volume Approach

NIST is planning internal reports covering:

Using and fine-tuning predictive AI

Generative AI security controls

Developer-focused guidance

Agentic AI considerations

TAKEAWAY

Don't attempt to implement comprehensive control catalogs. Select what's relevant to your specific AI implementations. Use lightweight overlay building blocks tailored to your use cases.

📧 Submit comments: [email protected]

💬 Join the conversation: NIST Overlays for Securing AI Systems Slack channel - get updates, engage with NIST investigators, and help shape the overlays

Agentic AI: The Attack Surface Multiplier

This is where things get serious. Agentic AI doesn't just introduce new risks -it exponentially increases your attack surface compared to static chatbots.

Why? Because agents take actions across your enterprise APIs.

Critical Questions You Need to Answer

Accountability – How do you bind individual accountability to semi-autonomous agent actions?

Delegation chains – How do you manage trust when agents can create other agents?

Audit trails – How do you audit actions taken autonomously?

Data aggregation – When AI summarizes data, sensitivity levels can change. Are you tracking this?

"AI systems are black boxes - that's true for the larger deep neural networks for which we don't have really good methodologies to understand what you're actually going to get until you run it through the network."

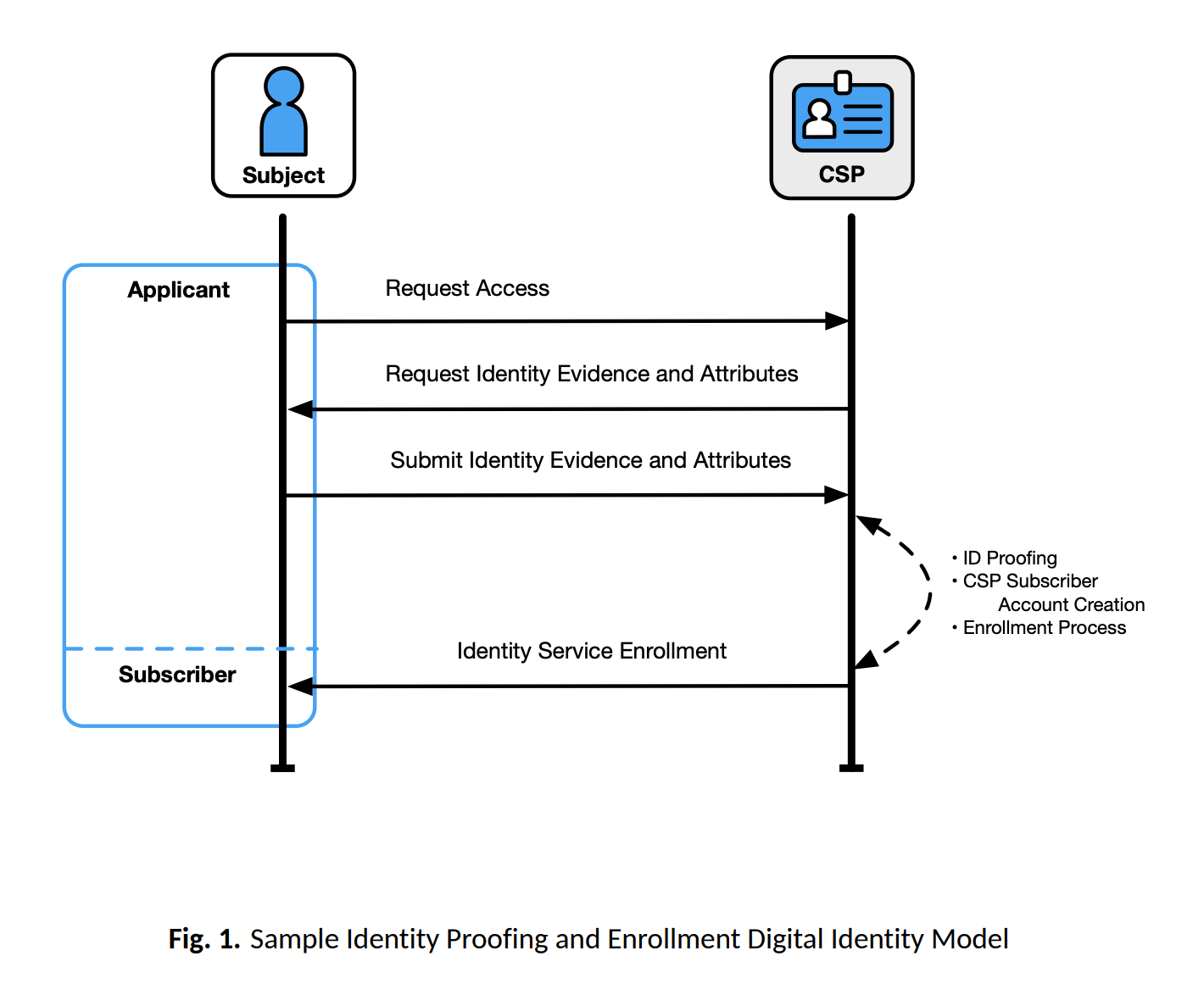

Identity Management for AI Agents

The shift from human-centric authentication to agent identity management is fundamental.

Key standards to watch:

OAuth 2.0/2.1 – Authentication standards for agent identity

SPIFFE – Machine identity framework

Model Context Protocol (MCP) – Open standard for AI agent tool integration

OWASP GenAI Security Project – Including Top 10 for Agentic Applications

OAuth and SPIFFE are production-ready but weren't designed for autonomous agents; MCP is purpose-built but nascent. Most orgs will need to layer these together rather than pick one.

TAKEAWAY

Implement human-in-the-loop controls for agent authorization, especially for sensitive data access. Establish identity management frameworks that enable accountability tracing back to responsible humans.

Validate AI model formats for trojans and malware when receiving from third parties.

OTHER NEWS

DeepSeek Model Evaluation

NIST's CAISI found DeepSeek models are more susceptible to agent hijacking, prompt injection, and jailbreaking attacks than leading US models.

📚 Read the report: CAISI Evaluation of DeepSeek AI Models

SSDF AI Profile for Generative AI

NIST SP 800-218A extends the Secure Software Development Framework specifically for generative AI systems. If you're building or deploying GenAI, this is your secure development baseline.

AI Risk Management Framework (AI RMF)

The AI RMF provides voluntary guidance for managing AI risks. It complements the Cybersecurity AI Profile and helps organizations map trustworthy AI characteristics.

Privacy Engineering

"Reducing privacy threats with privacy-enhancing technologies is predicated on a solid cybersecurity foundation."

Get your security fundamentals right before adding privacy-enhancing tech. NIST is also updating the Privacy Framework and developing a Privacy Enhancing Technologies (PETs) Testbed to benchmark these emerging tools.

Dioptra: NIST's Open-Source AI Testing Platform

Dioptra is NIST's open-source software testbed for evaluating AI system trustworthiness. The platform supports the Measure function of the AI Risk Management Framework by enabling reproducible security evaluations of AI models.

It allows organizations to test AI systems against evasion attacks, poisoning attacks, and oracle attacks while tracking all inputs and outputs. It's been in development for years and is available now on GitHub.

SO WHAT, WHAT’S NEXT?! (ACTION ITEMS)

Immediate

Assess your current state – Use community profiles to benchmark against peers

Bridge your teams – Establish shared taxonomy between AI and cybersecurity teams

Strategic

Extend, don't reinvent – Map AI considerations to existing CSF and 800-53 frameworks

Proactive defense – Continuous guardrail updates and regular red teaming

Supply chain awareness – Track data provenance and evaluate third-party AI models

Emerging Priorities

Agent identity frameworks – Start planning now for AI agent accountability

Human-in-the-loop – Maintain oversight for sensitive operations

Enterprise-wide training – AI-enabled attacks affect everyone, not just security teams

Want to learn more about NIST guidance including the Cybersecurity Framework and Risk Management? Check out my courses in Simply Cyber Academy.